
123 SYNs to LISTEN sockets dropped

TCP SYN queue and accept queue: a troubleshooting case study

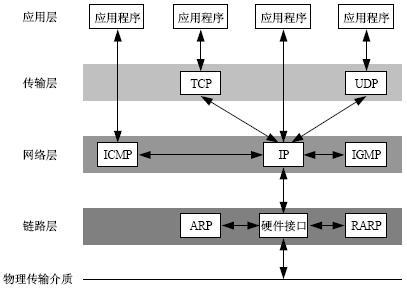

TCP/IP

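To understand sockets, you first need to be familiar with the TCP/IP protocol suite. TCP/IP (Transmission Control Protocol/Internet Protocol) defines the standards for how hosts connect to the Internet and how data is transferred between them.

Taken literally, TCP/IP is the combined name of the TCP and IP protocols, but in practice it refers to the entire Internet protocol suite. Unlike the seven layers of the OSI model, the TCP/IP reference model groups all TCP/IP protocols into four abstraction layers:

Application layer: TFTP, HTTP, SNMP, FTP, SMTP, DNS, Telnet, and so on

Transport layer: TCP, UDP

Network layer: IP, ICMP, OSPF, EIGRP, IGMP

Link layer: SLIP, CSLIP, PPP (the MTU is a property of this layer rather than a protocol)

Each abstraction layer builds on the services of the layer below it and provides services to the layer above it.

Readers interested enough to open this article probably know this already, and my own understanding is only partial, so I will not explain it in detail; plenty of material is available online.

In TCP/IP, two Internet hosts are connected through two routers and the corresponding layers. Applications on each host read from and write to each other over data channels, as the figure below shows.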

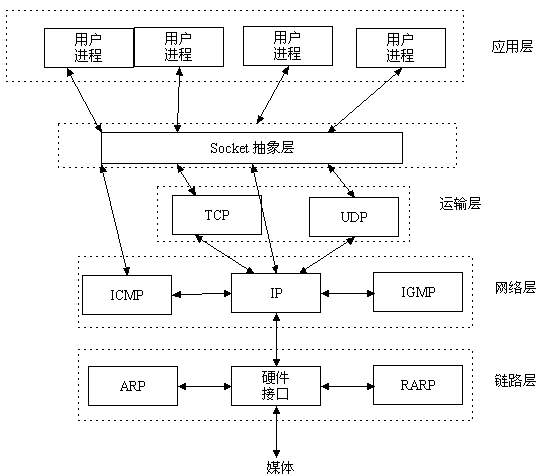

socket

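For two processes to communicate, the most basic prerequisite is being able to identify a process uniquely. For local inter-process communication a PID does this, but a PID is unique only on one machine, and the PIDs of two processes on different hosts are quite likely to collide, so another approach is needed. An IP address at the IP layer uniquely identifies a host, and the transport protocol plus port number uniquely identifies a process on that host, so the combination of IP address, protocol, and port number can uniquely identify a process on the network.

Once processes on the network can be identified uniquely, they can communicate through sockets. What is a socket? It is an abstraction layer between the application layer and the transport layer. It wraps the complex operations of TCP/IP into a few simple interfaces that the application layer calls to let processes communicate over the network.

Sockets originated in UNIX. Following the Unix philosophy that everything is a file, a socket is an implementation of the "open, read/write, close" pattern: the server and the client each maintain a "file"; once the connection is established and opened, each side can write to its own file for the other side to read, or read what the other side wrote, and it closes the file when communication ends.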

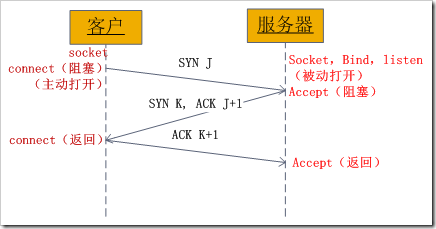

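Socket communication flow

A socket implements the "open, read/write, close" pattern. Taking a socket that uses TCP as the example, the interaction goes roughly like this:

The server creates a socket from an address family (IPv4, IPv6), a socket type, and a protocol

The server binds an IP address and port number to the socket

The server socket listens on the port, ready to receive connections from clients; at this point no connection has been opened on the server socket

The client creates a socket

The client opens its socket and tries to connect to the server socket using the server's IP address and port number

The server socket receives the client's request and is passively opened. On the server, accept() blocks: it does not return until a client connection has completed, after which the server can go on to accept the next client's connection request

The client connects successfully and sends its connection status information (the final ACK) to the server

The server's accept() returns; the connection is established

The client writes data to the socket

The server reads the data

The client closes

The server closes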
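The flow above can be sketched on the loopback interface. This is a minimal illustration, not production code: the helper names `run_server` and `demo`, the message contents, and the use of a thread for the server side are all assumptions made for the example.

```python
import socket
import threading


def run_server(listener: socket.socket, received: list) -> None:
    """Accept one client, read its message, reply, then close."""
    conn, _peer = listener.accept()      # blocks until a connection has completed
    with conn:
        data = conn.recv(1024)           # server reads what the client wrote
        received.append(data)
        conn.sendall(b"ack:" + data)     # server writes a reply


def demo() -> bytes:
    # Server side: create, bind, listen.
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))      # port 0 lets the OS pick a free port
    listener.listen(5)
    port = listener.getsockname()[1]

    received: list = []
    server = threading.Thread(target=run_server, args=(listener, received))
    server.start()

    # Client side: create, connect, write, read, close.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as client:
        client.connect(("127.0.0.1", port))
        client.sendall(b"hello")
        reply = client.recv(1024)

    server.join()
    listener.close()
    return reply


if __name__ == "__main__":
    print(demo())
```

Note that `connect()` can succeed before the server thread reaches `accept()`, because the kernel completes the handshake on behalf of the listening socket.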

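Three-way handshake

SYN: synchronize sequence numbers

ACK: acknowledgement number

FIN: finish flag

In TCP/IP, TCP establishes a reliable connection through three exchanges. The procedure is named the TCP three-way handshake.

TCP three-way handshake (connection open)

The client sends a request to create a TCP connection with the server: SYN(J)

After receiving the client's request, the server returns two pieces of information: SYN(K) + ACK(J+1)

After receiving the server's ACK and verifying it (J against J+1), the client returns ACK(K+1)

The server receives the client's ACK, verifies it (K against K+1), and sends nothing further; the connection then enters the data transfer phase

Graceful TCP connection termination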

Packets 1, 2, 3: Client <--> Server | TCP three-way handshake

Client --> Server | HTTP GET

Server --> Client | TCP ACK

Server --> Client | HTTP response

Server --> Client | TCP RST, ACK

The exchange above ends with a reset. What follows covers the graceful TCP connection termination.

Suppose two people are talking to each other over the phone, with voice flowing in each direction. Before ending the call, each person makes sure that everything they said has reached the other person, and then says a final goodbye. TCP is similar: a sender tells the receiver that it has no more data to send and requests to close the stream.

At the protocol level, this is conveyed in the TCP FIN packet.

Upon receiving a close request from the TCP user, the TCP layer stops sending new packets and waits for the pending TCP ACKs. Once all pending packets have been transmitted successfully, the sender sends the TCP FIN to the receiver.

The other end then does the same.

In the following sections, we will see how the protocol flow achieves TCP connection termination.

Two packets, TCP FIN and TCP FIN ACK, are used for connection termination. Here we will discuss each packet in detail.

A reset (RST) is used instead when the connection needs to be closed or reset immediately.


https://www.cspsprotocol.com/tcp-connection-termination/

21:27:06.995846 IP (tos 0x0, ttl 64, id 45646, offset 0, flags [DF], proto TCP (6), length 64)

192.168.1.106.56166 > 124.192.132.54.80: Flags [S], cksum 0xa730 (correct), seq 992042666, win 65535, options [mss 1460,nop,wscale 4,nop,nop,TS val 663433143 ecr 0,sackOK,eol], length 0

21:27:07.030487 IP (tos 0x0, ttl 51, id 0, offset 0, flags [DF], proto TCP (6), length 44)

124.192.132.54.80 > 192.168.1.106.56166: Flags [S.], cksum 0xedc0 (correct), seq 2147006684, ack 992042667, win 14600, options [mss 1440], length 0

21:27:07.030527 IP (tos 0x0, ttl 64, id 59119, offset 0, flags [DF], proto TCP (6), length 40)

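192.168.1.106.56166 > 124.192.132.54.80: Flags [.], cksum 0x3e72 (correct), ack 2147006685, win 65535, length 0

The most basic and most important information is the source address/port and destination address/port of each packet. In the first packet of the example above, the source IP is 192.168.1.106, the source port is 56166, the destination address is 124.192.132.54, and the destination port is 80. The > symbol shows the direction of the data.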

In addition, the three packets above are the TCP three-way handshake. The first one is the SYN segment, which can be seen from Flags [S]. Below are the common TCP flags:

Client --> Server [SYN]

Server --> Client [SYN, ACK]

Client --> Server [ACK]

.....

Server --> Client [FIN, ACK]

Client --> Server [ACK]

Client --> Server [TCP Segment of a reassembled PDU] (Wireshark's label for a segment that carries part of a larger application-layer message spread over several TCP segments)

Server --> Client [RST]

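[S] - SYN (start a connection)

[S.] - SYN-ACK

[.] - ACK (some older tcpdump versions documented a lone "." as "no flags")

[P] - PSH (push data)

[F] - FIN (finish a connection)

[F.] - FIN-ACK

[R] - RST (reset a connection)

[R.] - RST-ACK

The [S.] on the second packet means SYN-ACK, that is, the reply to the SYN segment.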
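As a rough illustration of reading these flags, the sketch below parses the detail line of a tcpdump record and checks that three segments form a handshake (each acknowledgement number equals the peer's sequence number plus one). The function names `parse_segment` and `is_three_way_handshake` are hypothetical, and the regular expressions only assume the output layout shown in the capture above.

```python
import re

# tcpdump flag characters mapped to TCP flag names
FLAG_NAMES = {"S": "SYN", "F": "FIN", "P": "PSH", "R": "RST", ".": "ACK"}


def parse_segment(line: str) -> dict:
    """Extract endpoints, flags, seq and ack from one tcpdump detail line."""
    head = re.search(r"(\S+) > (\S+): Flags \[([^\]]+)\]", line)
    if head is None:
        raise ValueError("not a tcpdump TCP detail line")
    seq = re.search(r"\bseq (\d+)", line)
    ack = re.search(r"\back (\d+)", line)
    return {
        "src": head.group(1),
        "dst": head.group(2),
        "flags": [FLAG_NAMES.get(ch, ch) for ch in head.group(3)],
        "seq": int(seq.group(1)) if seq else None,
        "ack": int(ack.group(1)) if ack else None,
    }


def is_three_way_handshake(segments: list) -> bool:
    """True if the three parsed segments are SYN, SYN-ACK, ACK with matching numbers."""
    if len(segments) != 3:
        return False
    syn, synack, ack = segments
    if (syn["flags"], synack["flags"], ack["flags"]) != (
        ["SYN"], ["SYN", "ACK"], ["ACK"]
    ):
        return False
    if syn["seq"] is None or synack["seq"] is None:
        return False
    wrap = 2 ** 32  # sequence numbers are 32-bit and wrap around
    return (
        synack["ack"] == (syn["seq"] + 1) % wrap
        and ack["ack"] == (synack["seq"] + 1) % wrap
    )
```

Applied to the three records captured above, the SYN carries seq 992042666, the SYN-ACK acknowledges 992042667, and the final ACK acknowledges 2147006685, which is the server's seq 2147006684 plus one.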

See: Transmission Control Protocol (TCP) Parameters

netstat -s | grep -i listen

SYNs to LISTEN sockets dropped

5332 times the listen queue of a socket overflowed

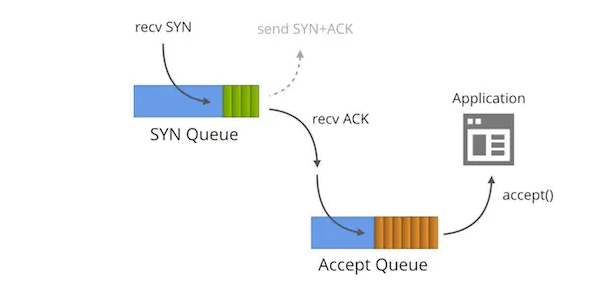

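7503 SYNs to LISTEN sockets dropped

The two TCP queues: SYN queue and accept queue

This article explains it fairly clearly: SYN packet handling in the wild

The Linux kernel network stack uses two queues to manage incoming TCP connections. One is the half-open connection queue, the SYN queue, which holds requests in the SYN_RECV state (SYN_SENT is the matching state on the client side). The other is the accept queue, which holds connections that are already ESTABLISHED but have not yet been taken by the application through accept().

When a client opens a connection, it sends a SYN to the server. If the server's SYN queue is already full when the SYN arrives, the server drops it without replying. After timing out, the client keeps retransmitting the SYN at growing intervals (3 s, 9 s, and so on; the exact timing depends on the kernel's initial retransmission timeout).

If the server's SYN queue is not full, the server replies to the client with SYN+ACK. When the client receives it, the client changes its state to ESTABLISHED and sends an ACK to the server.

When the server receives the ACK, it changes the state to ESTABLISHED and moves the request from the SYN queue to the accept queue.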

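SYN queue

Holds requests in the half-open state. Its size is set through /proc/sys/net/ipv4/tcp_max_syn_backlog.

1. The client sends a SYN to the server and changes its state to SYN_SENT. When the server receives the request, it changes the state to SYN_RCVD (shown as SYN_RECV by Linux tools) and puts the request in the SYN queue.

2. The server replies with SYN+ACK. When the client receives it, the client changes its state to ESTABLISHED and sends an ACK to the server.

3. The server receives the ACK, changes the state to ESTABLISHED, and moves the request from the SYN queue to the accept queue.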
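The three steps can be mimicked with a toy model. This is only a sketch of the bookkeeping described here, not the kernel's algorithm (the real stack also involves SYN cookies, timers, and retransmissions); the class name `ListenSocketModel` and its counters are invented for illustration.

```python
from collections import deque


class ListenSocketModel:
    """Toy model of a listening socket with a SYN queue and an accept queue."""

    def __init__(self, syn_queue_max: int, accept_queue_max: int) -> None:
        self.syn_queue_max = syn_queue_max
        self.accept_queue_max = accept_queue_max
        self.syn_queue = set()          # half-open requests (SYN_RECV)
        self.accept_queue = deque()     # established, waiting for accept()
        self.syn_dropped = 0
        self.accept_overflowed = 0

    def on_syn(self, peer: str) -> bool:
        """Step 1: a SYN arrives. Returns True if a SYN+ACK would be sent."""
        if len(self.syn_queue) >= self.syn_queue_max:
            self.syn_dropped += 1       # queue full: drop silently
            return False
        self.syn_queue.add(peer)
        return True

    def on_ack(self, peer: str) -> bool:
        """Step 3: the final ACK arrives. Returns True if the peer was promoted."""
        if peer not in self.syn_queue:
            return False
        if len(self.accept_queue) >= self.accept_queue_max:
            self.accept_overflowed += 1  # no room: the request stays half-open
            return False
        self.syn_queue.remove(peer)
        self.accept_queue.append(peer)
        return True

    def accept(self):
        """The application takes one established connection, if any."""
        return self.accept_queue.popleft() if self.accept_queue else None
```

With `ListenSocketModel(2, 1)`, a third SYN is dropped while two requests are half-open, and a second ACK cannot be promoted until the application calls `accept()` and frees a slot.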

Abnormal close scenarios

1. The application process exceeds its limit of open file descriptors

When a client calls connect(), the TCP connection is established even if the server is not currently calling accept(). This happens as long as the server has called listen(); the kernel keeps completing connections until the backlog limit is reached.

However, if the application process has reached the maximum number of file descriptors it may use, then when the server calls accept() there is no file descriptor available to allocate for the new socket. The accept() call fails, and the TCP connection is closed with a FIN sent to the other side.
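A process can check how close it is to that limit. The sketch below uses Python's `resource` module (Unix only) and, on Linux, counts the entries in `/proc/self/fd`; the helper names and the 90% threshold are assumptions chosen for illustration.

```python
import os
import resource


def fd_usage() -> dict:
    """Return the open descriptor count (Linux only) and the RLIMIT_NOFILE limits."""
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    try:
        # The listing itself briefly uses one descriptor, so this is approximate.
        open_fds = len(os.listdir("/proc/self/fd"))
    except OSError:
        open_fds = None  # /proc is not available on this platform
    return {"open": open_fds, "soft_limit": soft, "hard_limit": hard}


def near_limit(open_fds: int, soft_limit: int, threshold: float = 0.9) -> bool:
    """True when the open descriptor count reaches `threshold` of the soft limit."""
    if soft_limit == resource.RLIM_INFINITY:
        return False
    return open_fds >= soft_limit * threshold
```

A server could call `near_limit()` periodically and log a warning before accept() starts failing.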

2. The upstream server closes the connection after a 50x error

An upstream server may also elect to close the connection. This can happen when a 50x error is encountered. A packet capture can show you whether this is the case.

3. When the client closes its connection to nginx, nginx closes the corresponding upstream connection

If I turn ssl off, the connection looks like:

1. client connects to nginx

2. nginx connects to upstream

3. ...

4. client sends FIN/ACK to nginx

5. nginx sends FIN/ACK to upstream, and to client

With ssl on:

1. client connects to nginx

2. nginx connects to upstream

3. ...

4. client sends FIN/ACK to nginx

5. nginx waits 60 seconds

6. nginx sends FIN/ACK to upstream

After analyzing the TCP traffic, we found that nginx sometimes sends an RST immediately after it sends the FIN to the upstream server. Our conclusion is that when the client closes its connection to nginx, nginx closes the corresponding upstream connection without waiting for the upstream to finish the remaining work.

So, following the nginx documentation, we added proxy_ignore_client_abort on to the nginx config file. Reference: http://nginx.org/

proxy_ignore_client_abort

proxy_ignore_client_abort is off by default. In that case, if the client actively closes the request or the client's network drops while the request is in progress, Nginx logs 499; request_time is the time the backend had already spent processing, and upstream_response_time is "-" (verified).

If proxy_ignore_client_abort on; is used,

then after the client drops the connection, Nginx waits for the backend to finish (or to time out) and logs the backend's response. So if the backend returns 200, 200 is logged; if the backend returns 5XX, 5XX is logged.

On timeout (60 s by default, configurable with proxy_read_timeout), Nginx closes the connection itself and logs 504.

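About the RST message

The connection reset message is used for the abnormal close of a connection.

Below is a short list of situations that can trigger an RST close:

1. The server receives a connection request for a port on which nothing is listening.

2. One side deliberately closes with RST in place of the normal four-way FIN close (see: TCP connection performance metrics: the TCP close process (four-way close)), mainly as a special-purpose optimization.

3. The client or the server hits an error and cannot continue to handle the connection normally, so it sends RST to terminate it.

4. Handling stray TCP segments.

5. No acknowledgement arrives from the peer for a long time; after a timeout or a number of retransmissions, an RST is sent to terminate the connection.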
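The first situation is easy to observe from the client side: connecting to a local port that has no listener is answered with an RST, which `connect()` reports as `ECONNREFUSED`. The sketch below is an illustration under assumptions: the function name is invented, and the port is kept bound (but never passed to `listen()`) only so that nothing else can claim it during the test.

```python
import socket


def connect_to_port_without_listener() -> str:
    """Try to connect to a bound but non-listening local port and report the result."""
    holder = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    holder.bind(("127.0.0.1", 0))        # reserve a port; listen() is never called
    port = holder.getsockname()[1]

    client = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    client.settimeout(2)
    try:
        client.connect(("127.0.0.1", port))
    except ConnectionRefusedError:
        return "refused"                 # the peer stack answered the SYN with RST
    except OSError as exc:
        return f"error: {exc}"
    else:
        return "connected"
    finally:
        client.close()
        holder.close()
```

A packet capture on the loopback interface during this call should show a `[S]` from the client followed by a `[R.]` from the server side.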

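The Linux kernel maintains two queues: the SYN queue and the accept queue

SYN queue

Holds requests in the half-open state. Its size is set through /proc/sys/net/ipv4/tcp_max_syn_backlog; a commonly seen default is 512, although the value varies with kernel version and available memory. The setting only takes effect when the system's syncookies feature is disabled. The TCP SYN flood, a common malicious DoS attack on the Internet, works by creating a large number of half-open requests and then abandoning them, so that the SYN queue cannot hold other, legitimate requests.

accept queue

Holds fully established connections. Its size is set through /proc/sys/net/core/somaxconn; when listen() is called, the kernel takes the smaller of the backlog argument passed in and the system parameter somaxconn.

If the accept queue is full, Linux by default ignores the client's final ACK (and drops new SYNs) so that the client retransmits. Only when net.ipv4.tcp_abort_on_overflow is set to 1 does the server answer with an RST, which the client sees as a reset connection.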
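The "smaller of the two values" rule can be written down directly. In this sketch the helper names are hypothetical; the `/proc/sys` path is the one named above, and the reader falls back to a default when the file is missing (for example on a non-Linux system).

```python
def read_sysctl_int(path: str, default=None):
    """Read the first integer from a /proc/sys file, or return `default`."""
    try:
        with open(path) as handle:
            return int(handle.read().split()[0])
    except (OSError, ValueError, IndexError):
        return default


def effective_accept_backlog(requested: int, somaxconn: int) -> int:
    """Accept queue limit: the smaller of the listen() backlog and somaxconn."""
    return min(requested, somaxconn)


if __name__ == "__main__":
    somaxconn = read_sysctl_int("/proc/sys/net/core/somaxconn")
    if somaxconn is not None:
        # An application asking for listen(fd, 1024) gets at most somaxconn.
        print(effective_accept_backlog(1024, somaxconn))
```

This is why raising the backlog argument in application code has no effect until somaxconn is raised as well.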

netstat -s shows the packets dropped because of the SYN queue

# netstat -s

Ip:

Forwarding: 2

11267061 total packets received

4 with invalid addresses

0 forwarded

0 incoming packets discarded

11267057 incoming packets delivered

12591863 requests sent out

Icmp:

2805 ICMP messages received

4 input ICMP message failed

ICMP input histogram:

destination unreachable: 20

echo requests: 2783

timestamp request: 2

2790 ICMP messages sent

0 ICMP messages failed

ICMP output histogram:

destination unreachable: 5

echo replies: 2783

timestamp replies: 2

IcmpMsg:

InType3: 20

InType8: 2783

InType13: 2

OutType0: 2783

OutType3: 5

OutType14: 2

Tcp:

124905 active connection openings

127868 passive connection openings

10758 failed connection attempts

2702 connection resets received

56 connections established

11233471 segments received

13193140 segments sent out

826800 segments retransmitted

82 bad segments received

21354 resets sent

InCsumErrors: 2

Udp:

30776 packets received

5 packets to unknown port received

0 packet receive errors

32809 packets sent

0 receive buffer errors

0 send buffer errors

UdpLite:

TcpExt:

292 SYN cookies sent

349 SYN cookies received

3 invalid SYN cookies received

9742 resets received for embryonic SYN_RECV sockets

32754 TCP sockets finished time wait in fast timer

4 packetes rejected in established connections because of timestamp

192843 delayed acks sent

80 delayed acks further delayed because of locked socket

Quick ack mode was activated 14579 times

151 times the listen queue of a socket overflowed

151 SYNs to LISTEN sockets dropped

2758725 packet headers predicted

1706313 acknowledgments not containing data payload received

3258231 predicted acknowledgments

TCPSackRecovery: 196636

Detected reordering 1127 times using SACK

Detected reordering 3 times using reno fast retransmit

15 congestion windows fully recovered without slow start

TCPDSACKUndo: 2

158 congestion windows recovered without slow start after partial ack

TCPLostRetransmit: 13204

TCPSackFailures: 448

6407 timeouts in loss state

711374 fast retransmits

67679 retransmits in slow start

TCPTimeouts: 41378

TCPLossProbes: 116050

TCPLossProbeRecovery: 429

TCPSackRecoveryFail: 21931

TCPDSACKOldSent: 14616

TCPDSACKOfoSent: 423

TCPDSACKRecv: 1368

TCPDSACKOfoRecv: 2

113 connections reset due to unexpected data

11 connections reset due to early user close

52 connections aborted due to timeout

TCPDSACKIgnoredOld: 2

TCPDSACKIgnoredNoUndo: 1190

TCPSpuriousRTOs: 11

TCPSackShifted: 6995

TCPSackMerged: 54357

TCPSackShiftFallback: 204505

TCPTimeWaitOverflow: 80015

TCPReqQFullDoCookies: 292

TCPRcvCoalesce: 252342

TCPOFOQueue: 109422

TCPOFOMerge: 404

TCPChallengeACK: 102

TCPSYNChallenge: 80

TCPFromZeroWindowAdv: 12867

TCPToZeroWindowAdv: 12867

TCPWantZeroWindowAdv: 499

TCPSynRetrans: 3582

TCPOrigDataSent: 10693977

TCPHystartTrainDetect: 3

TCPHystartTrainCwnd: 368

TCPACKSkippedSynRecv: 19

TCPACKSkippedSeq: 152

TCPACKSkippedTimeWait: 1

TCPACKSkippedChallenge: 4

TCPWinProbe: 497

TCPKeepAlive: 3029

IpExt:

InOctets: 63964564217

OutOctets: 70118719208

InNoECTPkts: 11762696
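To track the two relevant lines over time, the counters can be pulled out of the `netstat -s` text and compared between samples. This is a small sketch: the function names are invented, and the patterns assume the exact English wording shown in the output above.

```python
import re

PATTERNS = {
    "listen_overflows": r"(\d+) times the listen queue of a socket overflowed",
    "listen_drops": r"(\d+) SYNs to LISTEN sockets dropped",
}


def listen_counters(netstat_s_output: str) -> dict:
    """Extract the listen queue counters from `netstat -s` text (None if absent)."""
    result = {}
    for name, pattern in PATTERNS.items():
        match = re.search(pattern, netstat_s_output)
        result[name] = int(match.group(1)) if match else None
    return result


def rate_per_second(earlier: int, later: int, seconds: float) -> float:
    """Average counter growth per second between two samples."""
    return (later - earlier) / seconds
```

Sampling the output twice, some seconds apart, and passing both `listen_drops` values to `rate_per_second` gives the drop rate for that interval.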

https://www.ipcpu.com/2016/10/tcp-handshake-backlog/

https://blog.csdn.net/whatday/article/details/107740002

Troubleshooting SYNs to LISTEN sockets dropped message from netstat

Elevated number of dropped TCP connections to a listening remote network socket.

The symptom:

“SYNs to LISTEN sockets dropped” increments at a high rate:

Obtaining the baseline:

- Starting from a lower level, let's check the size of the transmit queue on the network interface and make sure there aren’t any collisions:

- Next, let’s check to see if the interface is dropping packets due to the transmit queue:

- Finally, check for any fragmentation problems:

- Moving up the stack, print the Accept Queue sizes for the listening service. Recv-Q shows the number of sockets in the Accept Queue, and Send-Q shows the backlog parameter:

- Nothing really in the accept queue, let’s check how many connections are in SYN-RECV state for the receiving process in question:

- Connections are moving to ESTABLISHED pretty quickly. Let’s make sure we have enough file descriptors available (the current number of allocated file handles, the number of unused but allocated file handles, the system-wide maximum):

- Check for half-closed connections, waiting on FIN,ACK and total established connections:

- No concerns here, based on the total number of connections. Let’s check the number of concurrent (NEW) connections:

- Current rate is at 180 NEW connections per second. Observing the rate on a single node for a 24 hours period, we peak at about 250 connections per second. Checking the CPU and memory utilization shows a pretty idle system even during peak send times:

- Finally, checking the counter for dropped SYN packets, shows an ever increasing number at a rate of about 20/sec:

- The main reason for dropping SYN packets is when the SYN Queue is getting full. I was not able to see that in any of the above diagnostics. For better visibility let’s install some kernel hooks with SystemTap to print details on specifically what connections suffer due to Accept Queue overflow. This should help in identifying periodically hung applications that fail to accept() connections fast enough:

- Unfortunately running the kernel hook for 24 hours did not yield any results. The SYN and ACCEPT queues were nearly empty, though the “SYNs to LISTEN sockets dropped” issue persisted.
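The commands used for these checks are not shown above. As one possible way to perform the accept queue check, the sketch below parses text in the column layout printed by `ss -ltn` (State, Recv-Q, Send-Q, Local Address:Port, Peer Address:Port). The function name and the sample rows in the usage note are made up for illustration.

```python
def accept_queue_usage(ss_output: str) -> list:
    """Return queued connections and backlog for each LISTEN row of `ss -ltn` text."""
    rows = []
    for line in ss_output.splitlines():
        fields = line.split()
        if len(fields) < 5 or fields[0] != "LISTEN":
            continue  # skip the header and anything that is not a listener
        rows.append({
            "local": fields[3],
            "queued": int(fields[1]),    # Recv-Q: sockets waiting in the accept queue
            "backlog": int(fields[2]),   # Send-Q: configured backlog
        })
    return rows


def busiest(rows: list):
    """Return the listener with the highest queued/backlog ratio, or None."""
    candidates = [row for row in rows if row["backlog"] > 0]
    if not candidates:
        return None
    return max(candidates, key=lambda row: row["queued"] / row["backlog"])
```

For example, given one row with Recv-Q 0 and Send-Q 128 and another with Recv-Q 96 and Send-Q 128, `busiest` returns the second, which is the listener to watch.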

After each incremental change, I measured the rate of SYN errors and checked the SYN and Accept queue utilizations.

- Increased the number of incoming connections backlog queue. This queue sets the maximum number of packets, queued on the INPUT side:

- Increased the overall TCP memory, in pages (number of guaranteed pages for TCP, the threshold at which TCP should start to conserve pages, maximum number of allocatable pages):

- Increased the core system socket read and write buffers absolute max, in bytes. The applications cannot request more than this value:

- Increased the system socket read and write buffers (min, default and max size in bytes):

- Ensured TCP window scaling is enabled:

- Updated how many times to retry SYN connections. With the default the final timeout for an active TCP connection attempt will happen after 127 seconds:

- And arguably most importantly I’ve increased the limit of the socket listen() backlog, the maximum value that net.ipv4.tcp_max_syn_backlog can take. The kernel documentation states that if this limit is reached SYN packets will be dropped:

- Even though that huge number got accepted (the default varies by kernel version, from 128 to 4096) the queue can’t be more than 65535 it seems:

- Increased the Listener queue length for unacknowledged SYN_RECV connection attempts. A SYN_RECV request socket consumes about 304 bytes of memory:

- Checking to see how many connections are in SYN_RECV state after the above change:

- Increased the number of times SYNACKs for a passive TCP connection attempt will be retransmitted. With the default, the final timeout for a passive TCP connection will happen after 63 seconds:

- Finally, disabling the reuse of TCP connections (at the expense of increased number of TIME_WAIT connections and about 120MB of extra memory usage) yielded the best result, dropped SYN packets went down to about 3 per 15 minutes!
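The 127-second and 63-second figures quoted in the list follow from exponential backoff. The worked calculation below assumes an initial retransmission timeout of 1 second that doubles on every retry, with 6 SYN retries and 5 SYNACK retries as the defaults; those values are assumptions about the kernel in use, and the function name is hypothetical.

```python
def handshake_give_up_seconds(retries: int, initial_rto: float = 1.0) -> float:
    """Total wait before giving up: the first attempt plus `retries`
    retransmissions, each waiting twice as long as the previous one."""
    return sum(initial_rto * 2 ** attempt for attempt in range(retries + 1))


if __name__ == "__main__":
    print(handshake_give_up_seconds(6))  # active open: 1+2+4+8+16+32+64
    print(handshake_give_up_seconds(5))  # passive open: 1+2+4+8+16+32
```

Lowering either retry count shortens the time a half-open request can occupy the SYN queue, at the cost of giving up sooner on slow or lossy paths.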

#netstat -antp

tcp 0 0 127.0.0.1:27017 127.0.0.1:36022 ESTABLISHED 480/mongod

tcp 0 0 172.19.23.189:34288 100.100.36.108:443 TIME_WAIT -

tcp 0 0 127.0.0.1:53150 127.0.0.1:4999 TIME_WAIT -

tcp 0 0 127.0.0.1:36362 127.0.0.1:6700 TIME_WAIT -

tcp 0 0 127.0.0.1:6379 127.0.0.1:47924 ESTABLISHED 18736/redis-server

tcp 0 0 127.0.0.1:6379 127.0.0.1:58054 ESTABLISHED 18736/redis-server

Check the current connection state of a given port on the local machine

#netstat -antp | grep 5999

tcp 0 0 0.0.0.0:5999 0.0.0.0:* LISTEN 8153/dotnet

tcp 0 0 172.219.23.189:5999 48.244.191.43:25616 ESTABLISHED 8153/dotnet

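Socket programming API

As mentioned earlier, a socket implements the "open, read/write, close" pattern. Here is a quick look at the API that sockets offer to applications, again using TCP as the example and the Unix socket API; other languages are very similar (in PHP even the names are almost identical). I only briefly explain what each function does and what its parameters mean; for actual usage, see the links in the references or search online.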

int socket(int domain, int type, int protocol);

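Allocates a socket descriptor and the resources it uses, according to the given address family, socket type, and protocol.

domain: the protocol family; common values are AF_INET, AF_INET6, AF_LOCAL, and AF_ROUTE, where AF_INET means IPv4 addresses

type: the socket type; common values are SOCK_STREAM, SOCK_DGRAM, SOCK_RAW, SOCK_PACKET, SOCK_SEQPACKET, and so on

protocol: the protocol; common values are IPPROTO_TCP, IPPROTO_UDP, IPPROTO_SCTP, IPPROTO_TIPC, and so on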

int bind(int sockfd, const struct sockaddr *addr, socklen_t addrlen);

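Assigns a specific address from an address family to the socket

sockfd: the socket descriptor, that is, the reference to the socket

addr: the protocol address to bind to sockfd

addrlen: the length of the address

A server usually binds a well-known address (such as IP address + port number) at startup to provide its service, and clients use that address to connect to the server. A client does not need to specify one; the system automatically assigns a port number and combines it with the host's own IP address. This is why a server usually calls bind() before listen(), whereas a client does not and instead gets a port chosen by the system at connect() time.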

int listen(int sockfd, int backlog);

Listens on the socket

sockfd: the descriptor of the socket to listen on

backlog: the maximum number of connections that can be queued for the socket

int connect(int sockfd, const struct sockaddr *addr, socklen_t addrlen);

Connects to a socket

sockfd: the client's socket descriptor

addr: the server's socket address

addrlen: the length of the socket address

int accept(int sockfd, struct sockaddr *addr, socklen_t *addrlen);

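Once the TCP server is listening and a client request has arrived, the server calls accept() to take the request

sockfd: the server's socket descriptor

addr: receives the client's socket address

addrlen: the length of the socket address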

ssize_t read(int fd, void *buf, size_t count);

Reads data from the socket

fd: the socket descriptor

buf: the buffer

count: the buffer length

ssize_t write(int fd, const void *buf, size_t count);

Writes data to the socket, which in effect sends it

fd: the socket descriptor

buf: the buffer

count: the buffer length

int close(int fd);

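Marks the socket as closed and decrements the reference count of the socket descriptor by 1. When the reference count reaches 0, TCP on the closing side sends a connection termination request to the peer.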

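References

https://users.cs.northwestern.edu/~agupta/cs340/project2/TCPIP_State_Transition_Diagram.pdf

PS. Some readers noticed that there is no demo here. The examples in the references are already very good, so I will not try to outdo them; I have also implemented a websocket server in C#, which I will introduce in a later post. Because I have only recently started working with sockets, this article likely contains quite a few mistakes, and corrections are welcome. The content mainly draws on the two blog posts above. All images come from the Internet, found through Baidu image search, so I cannot credit the original sources; if there is a copyright problem, please contact me by site message and I will deal with it right away.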

TCP Protocol explanation